import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

import pandas as pd

from sklearn import datasets

from sklearn import cluster

from sklearn import svm, datasets

from sklearn.model_selection import GridSearchCV

from sklearn.model_selection import train_test_split

from sklearn import treeTrain, test and Validation data¶

We will work with the iris data again.

iris_df = sns.load_dataset('iris')

iris_X = iris_df.drop(columns=['species'])

iris_y = iris_df['species']We will still use the test train split to keep our test data separate from the data that we use to find our preferred parameters.

iris_X_train, iris_X_test, iris_y_train, iris_y_test = train_test_split(iris_X,iris_y, random_state=0)We will be doing cross validation late, but we still use train_test_split at the start that we have the true test data

Think Ahead¶

What would you need to be able to find the best parameter settings?

what would be the inputs to an algorithm to optimize a model

what might the steps include?

Setting up model optmization¶

Today we will optimize a decision tree over three parameters.

One is the criterion, which is how it decides where to create thresholds in parameters. Gini is the default and it computes how concentrated each class is at that node, another is entropy, which is a measure of how random something is. Intuitively these do similar things, which makes sense because they are two ways to make the same choice, but they have slightly different calculations.

The other two parameters relate to the structure of the decision tree that is produced and their values are numbers.

max_depthis the height of the treemin_samples_leafper leaf makes it keeps the leaf sizes small.

dt = tree.DecisionTreeClassifier()

params_dt = {'criterion':['gini','entropy'],

'max_depth':[2,3,4],

'min_samples_leaf':list(range(2,20,2))}Grid Search¶

We will first to an exhaustive optimization on that parameter grid, params_dt.

The dictionary is called a parameter grid because it will be used to create a “grid” of different values, by taking every possible combination.

The GridSearchCV object will then also do cross validation, with the same default values we saw for cross_val_score of 5 fold cross validation (Kfold with K=5).

Solution to Exercise 1 #

GridSearchCV will cross validate the model for every combination of parameter values from the parameter grid.

To compute the number of fits this means this by first getting the lengths of each list of values:

num_param_values = {k:len(v) for k,v in params_dt.items()}

num_param_values{'criterion': 2, 'max_depth': 3, 'min_samples_leaf': 9}so we have 9 for min_samples_leaf because the range is inclusive of the start and exclusive of the stop, or in math it is .

Then multiplying to get the total number of combination

combos = np.prod([v for v in num_param_values.values()])

combosnp.int64(54)We have a total of np.int64(54) combinations that will be tested and since cv=5 it will fit each of those 5 times so the total number of fit models is np.int64(270)

We will instantiate it it with default CV settings.

dt_opt = GridSearchCV(dt,params_dt)The GridSearchCV keeps the same basic interface of estimator objects, we run it with the fit method.

dt_opt.fit(iris_X_train,iris_y_train)We can also get predictions, from the model with the highest score out of all of the combinations:

y_pred = dt_opt.predict(iris_X_test)we can also score it as normal.

test_score = dt_opt.score(iris_X_test,iris_y_test)

test_score0.9473684210526315This is our true test accuracy because this data iris_X_test,iris_y_test was not used at all for training or for optimizing the parameters.

we can also see the best parameters.

dt_opt.best_params_{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 2}Grid Search Results¶

The optimizer saves a lot of details of its process in a dictionary

Long output

dt_opt.cv_results_{'mean_fit_time': array([0.0135787 , 0.0018209 , 0.00372229, 0.00660176, 0.01143165,

0.01574168, 0.01472335, 0.01145902, 0.00425315, 0.00362816,

0.00828924, 0.00686426, 0.00515075, 0.0105021 , 0.00528789,

0.01715474, 0.01397805, 0.01779041, 0.01271362, 0.02076735,

0.01892314, 0.01172323, 0.01070547, 0.01127853, 0.01133447,

0.01876225, 0.00812964, 0.01238108, 0.01236014, 0.01633244,

0.02057581, 0.02000394, 0.01203508, 0.01582556, 0.01441035,

0.0120677 , 0.01101003, 0.01904478, 0.01206999, 0.01258621,

0.0119401 , 0.01310878, 0.00751882, 0.01208897, 0.01084099,

0.01039805, 0.01807566, 0.00771327, 0.00631948, 0.00734081,

0.00492249, 0.010676 , 0.00855103, 0.00869517]),

'std_fit_time': array([0.01041134, 0.00014996, 0.00220572, 0.00447824, 0.00486982,

0.003489 , 0.00469126, 0.00451251, 0.00196112, 0.00230184,

0.00321886, 0.00351127, 0.00367722, 0.00533254, 0.00451603,

0.00209582, 0.00990557, 0.00824141, 0.00883857, 0.00131405,

0.00914533, 0.00529414, 0.00478936, 0.00629935, 0.00741034,

0.00245332, 0.00754501, 0.00562443, 0.00846798, 0.00123906,

0.00560041, 0.00912638, 0.00819918, 0.00701143, 0.00642578,

0.00836878, 0.0075009 , 0.00373653, 0.00853791, 0.0088217 ,

0.00839563, 0.00618332, 0.00700655, 0.00853968, 0.00761235,

0.0058152 , 0.00840255, 0.00495855, 0.00372589, 0.00424917,

0.00237047, 0.00431032, 0.00559366, 0.00346522]),

'mean_score_time': array([0.01735945, 0.00162292, 0.00529613, 0.01205955, 0.00816736,

0.00923171, 0.0154757 , 0.00650721, 0.00265293, 0.0048502 ,

0.00545001, 0.00509062, 0.00711589, 0.00536671, 0.01037369,

0.00866585, 0.01326685, 0.01125422, 0.01288624, 0.00948629,

0.01482744, 0.00865607, 0.01018271, 0.01208425, 0.01506248,

0.01079869, 0.01371765, 0.01002908, 0.01841173, 0.00860109,

0.01540732, 0.01379642, 0.01178975, 0.00874729, 0.00749412,

0.00877533, 0.00874476, 0.00577774, 0.01223459, 0.00874772,

0.00753121, 0.00685968, 0.01223269, 0.01178837, 0.01224051,

0.01297393, 0.00833902, 0.00671868, 0.0061482 , 0.09924459,

0.00381932, 0.00383253, 0.00759468, 0.00809612]),

'std_score_time': array([0.01432923, 0.00032953, 0.00350428, 0.00573327, 0.00609004,

0.00527208, 0.00423887, 0.00410708, 0.00111733, 0.00479482,

0.0048045 , 0.00457791, 0.00346177, 0.00499238, 0.00614688,

0.00942021, 0.00943238, 0.00819928, 0.00941064, 0.00975086,

0.01112444, 0.00688377, 0.0046237 , 0.00565126, 0.00679911,

0.00754281, 0.00609075, 0.00697605, 0.00328326, 0.00569467,

0.00887974, 0.01027803, 0.00832779, 0.0088359 , 0.00745771,

0.00801361, 0.00883952, 0.00594547, 0.00876469, 0.00909562,

0.00732416, 0.00717148, 0.00885378, 0.0082807 , 0.00703383,

0.00592259, 0.00837408, 0.0042987 , 0.00411752, 0.18525502,

0.00296578, 0.00363196, 0.00506498, 0.00393763]),

'param_criterion': masked_array(data=['gini', 'gini', 'gini', 'gini', 'gini', 'gini', 'gini',

'gini', 'gini', 'gini', 'gini', 'gini', 'gini', 'gini',

'gini', 'gini', 'gini', 'gini', 'gini', 'gini', 'gini',

'gini', 'gini', 'gini', 'gini', 'gini', 'gini',

'entropy', 'entropy', 'entropy', 'entropy', 'entropy',

'entropy', 'entropy', 'entropy', 'entropy', 'entropy',

'entropy', 'entropy', 'entropy', 'entropy', 'entropy',

'entropy', 'entropy', 'entropy', 'entropy', 'entropy',

'entropy', 'entropy', 'entropy', 'entropy', 'entropy',

'entropy', 'entropy'],

mask=[False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False],

fill_value=np.str_('?'),

dtype=object),

'param_max_depth': masked_array(data=[2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, 3, 3,

4, 4, 4, 4, 4, 4, 4, 4, 4, 2, 2, 2, 2, 2, 2, 2, 2, 2,

3, 3, 3, 3, 3, 3, 3, 3, 3, 4, 4, 4, 4, 4, 4, 4, 4, 4],

mask=[False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False],

fill_value=999999),

'param_min_samples_leaf': masked_array(data=[2, 4, 6, 8, 10, 12, 14, 16, 18, 2, 4, 6, 8, 10, 12, 14,

16, 18, 2, 4, 6, 8, 10, 12, 14, 16, 18, 2, 4, 6, 8, 10,

12, 14, 16, 18, 2, 4, 6, 8, 10, 12, 14, 16, 18, 2, 4,

6, 8, 10, 12, 14, 16, 18],

mask=[False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False, False, False,

False, False, False, False, False, False],

fill_value=999999),

'params': [{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 2},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 4},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 6},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 8},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 10},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 12},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 14},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 16},

{'criterion': 'gini', 'max_depth': 2, 'min_samples_leaf': 18},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 2},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 4},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 6},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 8},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 10},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 12},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 14},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 16},

{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 18},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 2},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 4},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 6},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 8},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 10},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 12},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 14},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 16},

{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 18},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 2},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 4},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 6},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 8},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 10},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 12},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 14},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 16},

{'criterion': 'entropy', 'max_depth': 2, 'min_samples_leaf': 18},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 2},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 4},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 6},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 8},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 10},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 12},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 14},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 16},

{'criterion': 'entropy', 'max_depth': 3, 'min_samples_leaf': 18},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 2},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 4},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 6},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 8},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 10},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 12},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 14},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 16},

{'criterion': 'entropy', 'max_depth': 4, 'min_samples_leaf': 18}],

'split0_test_score': array([0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 1. ,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 1. , 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 1. , 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174, 0.95652174,

1. , 0.95652174, 0.95652174, 0.95652174, 0.95652174,

0.95652174, 0.95652174, 0.95652174, 0.95652174]),

'split1_test_score': array([0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.95652174, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348, 0.91304348,

0.91304348, 0.91304348, 0.91304348, 0.91304348]),

'split2_test_score': array([1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1., 1.,

1., 1., 1.]),

'split3_test_score': array([0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.95454545,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.95454545, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.95454545, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.95454545, 0.90909091, 0.90909091, 0.90909091, 0.90909091,

0.90909091, 0.90909091, 0.90909091, 0.90909091]),

'split4_test_score': array([0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545, 0.95454545,

0.95454545, 0.95454545, 0.95454545, 0.95454545]),

'mean_test_score': array([0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.96442688,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.97312253, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.96442688, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.96442688, 0.94664032, 0.94664032, 0.94664032, 0.94664032,

0.94664032, 0.94664032, 0.94664032, 0.94664032]),

'std_test_score': array([0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03276105,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.02195722, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03276105, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03276105, 0.03330494, 0.03330494, 0.03330494, 0.03330494,

0.03330494, 0.03330494, 0.03330494, 0.03330494]),

'rank_test_score': array([5, 5, 5, 5, 5, 5, 5, 5, 5, 2, 5, 5, 5, 5, 5, 5, 5, 5, 1, 5, 5, 5,

5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 5, 2, 5, 5, 5, 5, 5, 5, 5,

5, 2, 5, 5, 5, 5, 5, 5, 5, 5], dtype=int32)}It is easier to work with if we use a DataFrame:

dt_5cv_df = pd.DataFrame(dt_opt.cv_results_)First let’s inspect its shape:

dt_5cv_df.shape(54, 16)Notice that it has one row for each of the np.int64(54) combinations we computed above).

It has a lot of columns, we can use the head to see them

dt_5cv_df.head()the

fit_timeis the time it takes for the.fitmethod to run for a single setting of hyperparameter values. it computes the mean and std of these over the Kfold cross validation (so here, over 5 separate times)the

score_timeis the time to make predictions. it computes the mean and std of these over the Kfold cross validation (so here, over 5 separate times)there is one

param_column for each key in the parameter grid (<function dict.keys>)the

paramscolumn contains a dictionary of all of the parameters used for that rowthe

test_scores are better termed the validation accuracy because is truly the score for the validation data it is the “test” set from the splits in the cross validation loop. It records the value for each fold, the mean and the std for them.rank_test_scoreis the rank for that hyperparameter setting’smean_test_score

Since we used a classifier here, the score is accuracy if it was regression it would be the score, if Kmeans it would be the opposite of the Kmeans objective.

We can also plot the dta and look at the performance.

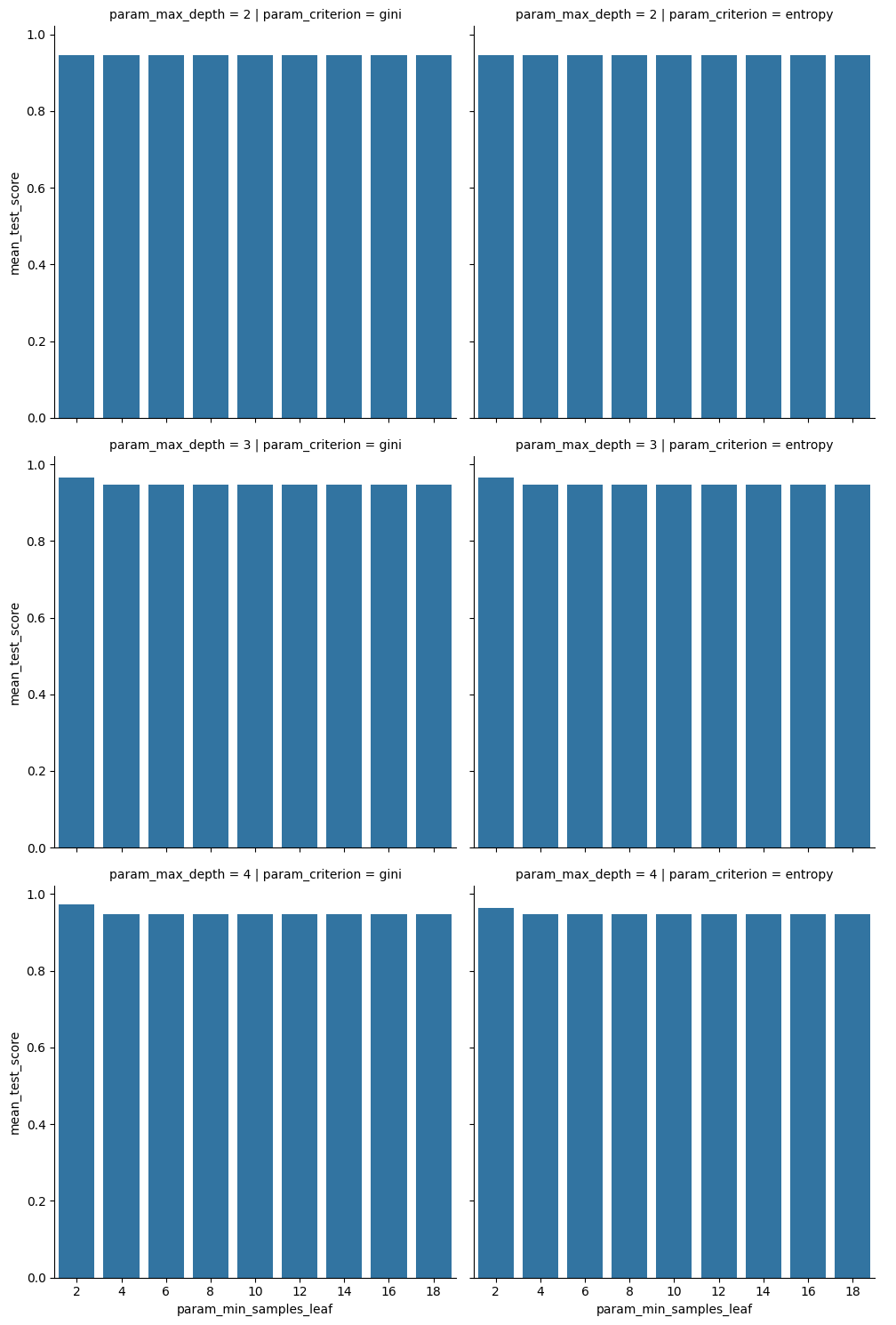

sns.catplot(data=dt_5cv_df,x='param_min_samples_leaf',y='mean_test_score',

col='param_criterion', row= 'param_max_depth', kind='bar',)<seaborn.axisgrid.FacetGrid at 0x7faad08edaf0>

this makes it clear that none of these stick out much in terms of performance.

The best model here is not much better than the others, but for less simple tasks there are more things to choose from.

Impact of CV parameters¶

Let’s fit again with cv=10 to see with 10-fold cross validation.

dt_opt10 = GridSearchCV(dt,params_dt,cv=10)

dt_opt10.fit(iris_X_train,iris_y_train)and get the dataframe for the results

dt_10cv_df = pd.DataFrame(dt_opt10.cv_results_)We can stack the columns we want from the two results together with a new indicator column cv:

plot_cols = ['param_min_samples_leaf','std_test_score','mean_test_score',

'param_criterion','param_max_depth','cv']

dt_10cv_df['cv'] = 10

dt_5cv_df['cv'] = 5

dt_cv_df = pd.concat([dt_5cv_df[plot_cols],dt_10cv_df[plot_cols]])

dt_cv_df.head()this can be used to plot.

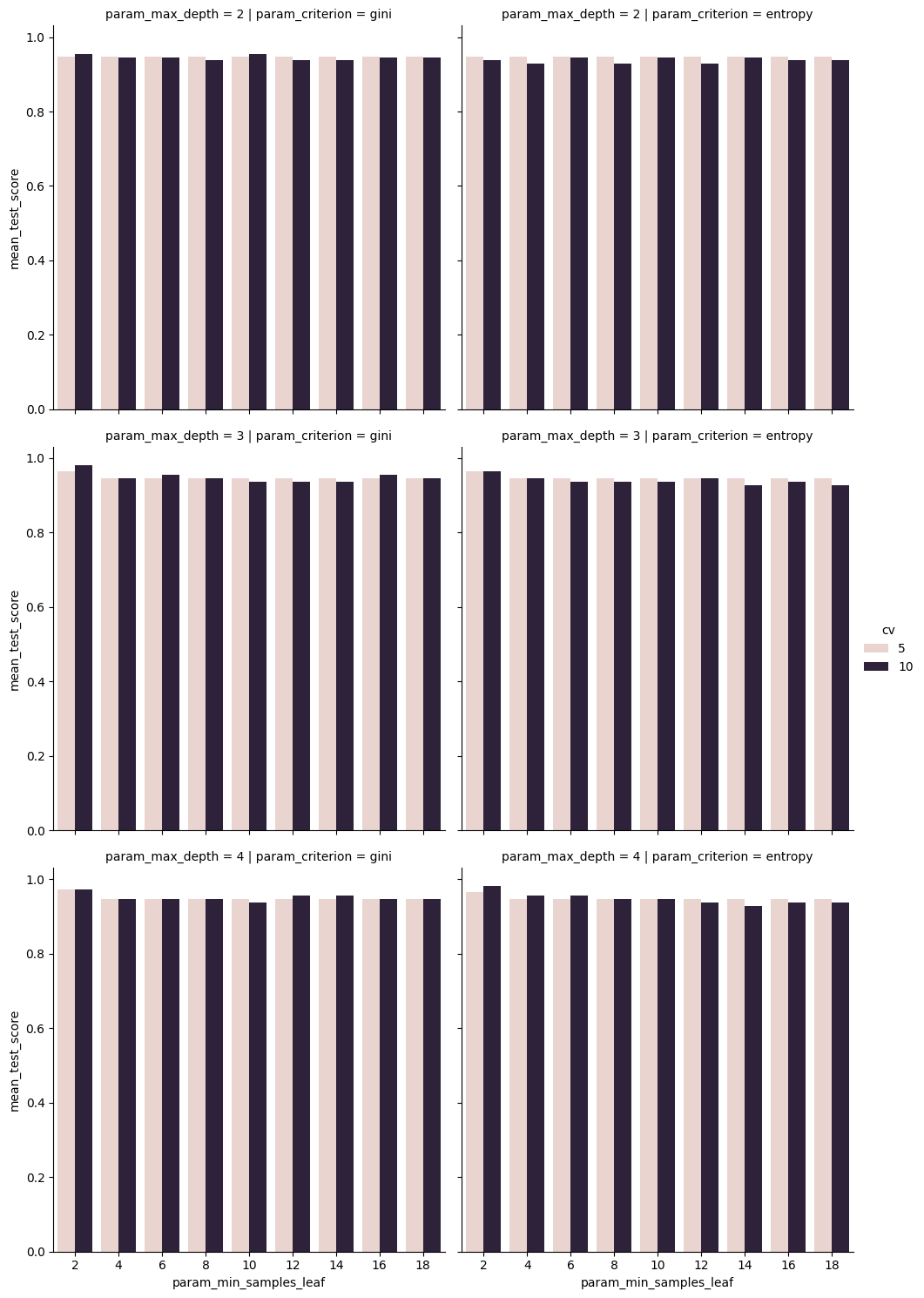

sns.catplot(data=dt_cv_df,x='param_min_samples_leaf',y='mean_test_score',

col='param_criterion', row= 'param_max_depth', kind='bar',

hue = 'cv')<seaborn.axisgrid.FacetGrid at 0x7faacfa001a0>

we see that the mean scores are not very different, but that 10 is a little higher in some cases. This makes sense, it has more data to learn from, so it found something that applied better, on average, to the test set.

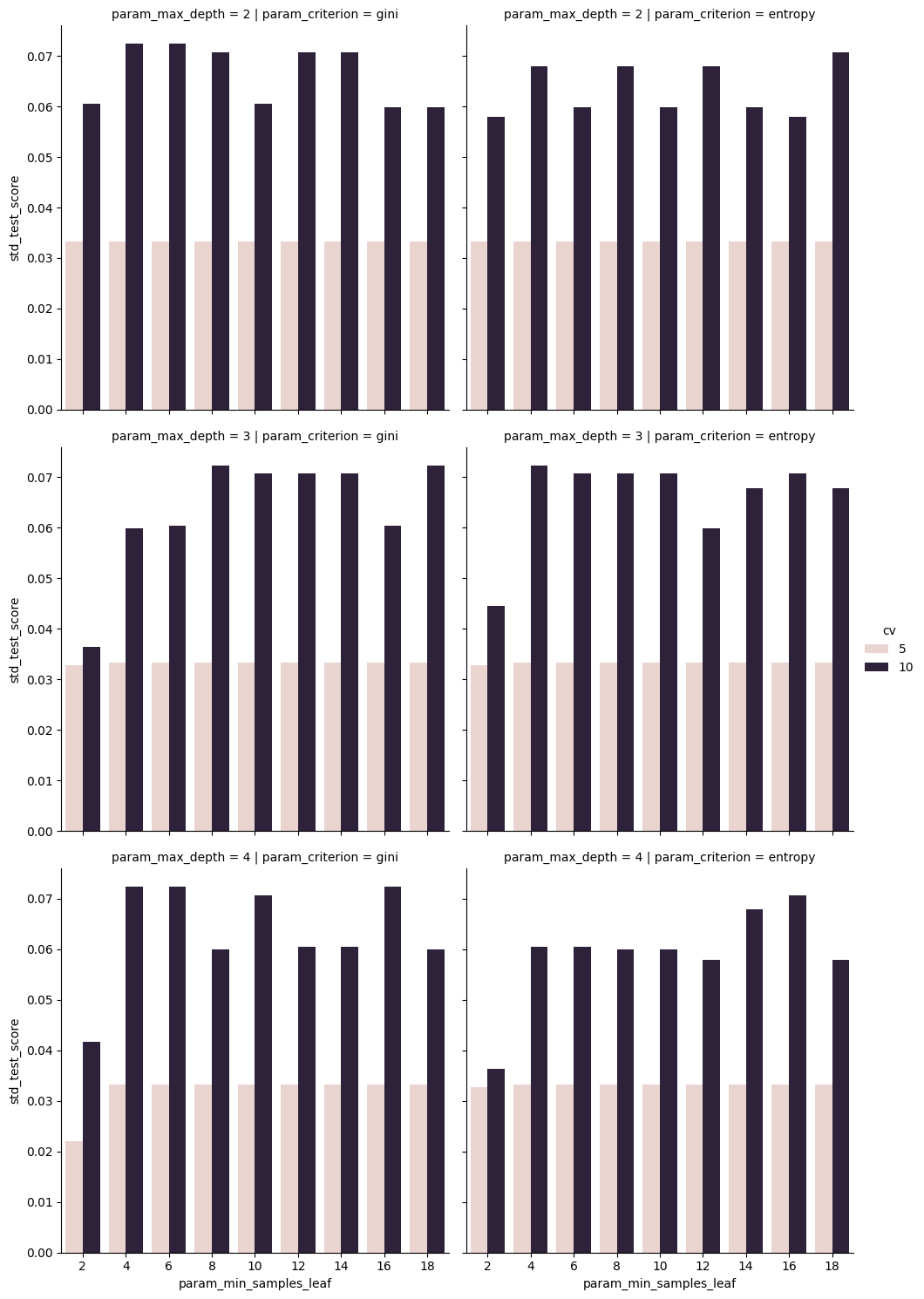

sns.catplot(data=dt_cv_df,x='param_min_samples_leaf',y='std_test_score',

col='param_criterion', row= 'param_max_depth', kind='bar',

hue = 'cv')<seaborn.axisgrid.FacetGrid at 0x7faad0760080>

However here we see that the variabilty in those scores is much higher, so maybe the 5 is better.

There were a really small number of samples used to compute each of those scores so some of them will vary a lot more.

.75*150112.5112/522.4We can compare to see if it finds the same model as best:

dt_opt.best_params_{'criterion': 'gini', 'max_depth': 4, 'min_samples_leaf': 2}dt_opt10.best_params_{'criterion': 'gini', 'max_depth': 3, 'min_samples_leaf': 2}In some cases they will and others they will not.

dt_opt.score(iris_X_test,iris_y_test)0.9473684210526315dt_opt10.score(iris_X_test,iris_y_test)0.9736842105263158In some cases they will find the same model and score the same, but it other time they will not.

The takeaway is that the cross validation parameters impact our ability to measure the score and possibly how close that cross validation mean score will match the true test score. Mostly it will change the variability in the estimate of the score. It does not change necessarily which model is best, that is up to the data iteself (the original test/train split would impact this).

Other searches¶

from sklearn import model_selection

from sklearn.model_selection import LeaveOneOutrand_opt = model_selection.RandomizedSearchCV(dt,params_dt,)

rand_opt.fit(iris_X_train, iris_y_train)rand_opt.score(iris_X_test,iris_y_test)0.9736842105263158It might find the same solution, but it also might not. If you do some and see that the parameters overall do not impact the scores much, then you can trust whichever one, or consider other criteria to choose the best model to use.

Choosing a model to use¶

The Grid search finds the hyperparameter values that result in the best mean score. But what if more than one does that?

dt_5cv_df['rank_test_score'].value_counts()rank_test_score

5 50

2 3

1 1

Name: count, dtype: int64Lets look at the ones sharing a rank of 1:

dt_5cv_df[dt_5cv_df['rank_test_score']==1]We can compare on other aspects, like the time. In particular a lower or more consistent score_time could impact how expensive it is to run your model in production.

dt_5cv_df[['mean_fit_time', 'std_fit_time', 'mean_score_time', 'std_score_time']].mean()mean_fit_time 0.011578

std_fit_time 0.005592

mean_score_time 0.011176

std_score_time 0.009834

dtype: float64dt_5cv_df[['mean_fit_time', 'std_fit_time', 'mean_score_time', 'std_score_time']].head(3)