Class 5: Accessing Data, continued¶

Today’s agenda:

warm up/ review

announcements

working with dataframes

the power of functions as objects

(maybe) exploratory data analysis

Try it out –>

Read the tables off of the syllabus course map page with read_html and make a list of the shapes of all of the tables on the page. Save the output to a variable and paste the value of that variable as your answer to the question.

import pandas as pd

[df.shape for df in pd.read_html('https://rhodyprog4ds.github.io/BrownFall20/syllabus/course_map.html')]

[(14, 3), (15, 5), (15, 15), (15, 6)]

Announcements¶

annotated notes are up

beginning portfolio prompts and instructions are up

Assignment due Sunday,

office hours will remain Fridays

TA office hours posted.

More Pandas¶

We’ll go back to the SAFI dataset from Wednesday.

safi_df = pd.read_csv('https://raw.githubusercontent.com/brownsarahm/python-socialsci-files/master/data/SAFI_clean.csv')

We downloaded the data into memory, but we can also write it to disk.

safi_df.to_csv('safi_clean.csv')

It will go to the same folder as the notebook, but we can also use a relative path. If we make a data folder in the folder where we’ve saved the notebook, we can write the file there instead.

safi_df.to_csv('data/safi_clean.csv')

Now we can read it in using the same path

safi_df2= pd.read_csv('data/safi_clean.csv')

Note that now it has an extra column

safi_df2.head(2)

| Unnamed: 0 | key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | 1 | 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

safi_df.head(2)

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

We can prevent this by writing it out with the index parameter set to False

safi_df.to_csv('data/safi_clean.csv',index=False)

Now when we read it in, there’s no extra column.

safi_df3 = pd.read_csv('data/safi_clean.csv')

safi_df3.head(3)

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

| 2 | 3 | God | 2016-11-17T00:00:00Z | 10 | 15 | burntbricks | 1 | NaN | NaN | 1 | solar_torch | 2 | Jan;Feb;Mar;Oct;Nov;Dec | uuid:193d7daf-9582-409b-bf09-027dd36f9007 |

Recall, we indexed a column with the name in square brackets

safi_df['village']

0 God

1 God

2 God

3 God

4 God

...

126 Ruaca

127 Ruaca

128 Ruaca

129 Chirodzo

130 Chirodzo

Name: village, Length: 131, dtype: object

To index rows, we can use loc

safi_df.loc[3]

key_ID 4

village God

interview_date 2016-11-17T00:00:00Z

no_membrs 7

years_liv 6

respondent_wall_type burntbricks

rooms 1

memb_assoc NaN

affect_conflicts NaN

liv_count 2

items_owned bicycle;radio;cow_plough;solar_panel;mobile_phone

no_meals 2

months_lack_food Sept;Oct;Nov;Dec

instanceID uuid:148d1105-778a-4755-aa71-281eadd4a973

Name: 3, dtype: object

To select a range, use :

safi_df.loc[3:5]

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 3 | 4 | God | 2016-11-17T00:00:00Z | 7 | 6 | burntbricks | 1 | NaN | NaN | 2 | bicycle;radio;cow_plough;solar_panel;mobile_phone | 2 | Sept;Oct;Nov;Dec | uuid:148d1105-778a-4755-aa71-281eadd4a973 |

| 4 | 5 | God | 2016-11-17T00:00:00Z | 7 | 40 | burntbricks | 1 | NaN | NaN | 4 | motorcyle;radio;cow_plough;mobile_phone | 2 | Aug;Sept;Oct;Nov | uuid:2c867811-9696-4966-9866-f35c3e97d02d |

| 5 | 6 | God | 2016-11-17T00:00:00Z | 3 | 3 | muddaub | 1 | NaN | NaN | 1 | NaN | 2 | Aug;Sept;Oct | uuid:daa56c91-c8e3-44c3-a663-af6a49a2ca70 |

You only have to have a number on one side of the colon, it will go from the beginnig up to that number like this:

safi_df.loc[:4]

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

| 2 | 3 | God | 2016-11-17T00:00:00Z | 10 | 15 | burntbricks | 1 | NaN | NaN | 1 | solar_torch | 2 | Jan;Feb;Mar;Oct;Nov;Dec | uuid:193d7daf-9582-409b-bf09-027dd36f9007 |

| 3 | 4 | God | 2016-11-17T00:00:00Z | 7 | 6 | burntbricks | 1 | NaN | NaN | 2 | bicycle;radio;cow_plough;solar_panel;mobile_phone | 2 | Sept;Oct;Nov;Dec | uuid:148d1105-778a-4755-aa71-281eadd4a973 |

| 4 | 5 | God | 2016-11-17T00:00:00Z | 7 | 40 | burntbricks | 1 | NaN | NaN | 4 | motorcyle;radio;cow_plough;mobile_phone | 2 | Aug;Sept;Oct;Nov | uuid:2c867811-9696-4966-9866-f35c3e97d02d |

With two :: we can also set an increment

safi_df.loc[::5]

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 5 | 6 | God | 2016-11-17T00:00:00Z | 3 | 3 | muddaub | 1 | NaN | NaN | 1 | NaN | 2 | Aug;Sept;Oct | uuid:daa56c91-c8e3-44c3-a663-af6a49a2ca70 |

| 10 | 11 | God | 2016-11-21T00:00:00Z | 6 | 20 | sunbricks | 1 | NaN | NaN | 2 | radio;cow_plough | 2 | Oct;Nov | uuid:d29b44e3-3348-4afc-aa4d-9eb34c89d483 |

| 15 | 16 | God | 2016-11-24T00:00:00Z | 6 | 47 | muddaub | 1 | NaN | NaN | 4 | radio;cow_plough;solar_panel;solar_torch | 3 | Jan;Feb | uuid:d17db52f-4b87-4768-b534-ea8f9704c565 |

| 20 | 21 | God | 2016-11-21T00:00:00Z | 8 | 20 | burntbricks | 1 | no | never | 3 | NaN | 2 | Jan;Feb;Mar;Oct;Nov;Dec | uuid:6570a7d0-6a0b-452c-aa2e-922500e35749 |

| 25 | 26 | Ruaca | 2016-11-21T00:00:00Z | 3 | 20 | burntbricks | 2 | no | never | 2 | radio;cow_plough;table;mobile_phone | 2 | none | uuid:1c54ee24-22c4-4ee9-b1ad-42d483c08e2e |

| 30 | 31 | Ruaca | 2016-11-21T00:00:00Z | 3 | 2 | muddaub | 1 | NaN | NaN | 1 | NaN | 3 | none | uuid:cb06eb49-dd39-4150-8bbe-a599e074afe8 |

| 35 | 36 | Chirodzo | 2016-11-17T00:00:00Z | 6 | 23 | sunbricks | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 3 | none | uuid:c90eade0-1148-4a12-8c0e-6387a36f45b1 |

| 40 | 41 | God | 2016-11-17T00:00:00Z | 7 | 22 | muddaub | 1 | NaN | NaN | 2 | motorcyle;bicycle;radio;cow_plough;table | 3 | Oct;Nov | uuid:b3ba34d8-eea1-453d-bc73-c141bcbbc5e5 |

| 45 | 46 | Chirodzo | 2016-11-17T00:00:00Z | 10 | 42 | burntbricks | 2 | no | once | 2 | motorcyle;computer;television;sterio;solar_pan... | 2 | Sept;Oct;Nov | uuid:35f297e0-aa5d-4149-9b7b-4965004cfc37 |

| 50 | 51 | Chirodzo | 2016-11-16T00:00:00Z | 5 | 30 | muddaub | 1 | NaN | NaN | 1 | radio | 3 | Oct;Nov | uuid:18ac8e77-bdaf-47ab-85a2-e4c947c9d3ce |

| 55 | 56 | Chirodzo | 2016-11-16T00:00:00Z | 12 | 23 | burntbricks | 2 | yes | never | 2 | motorcyle;bicycle;mobile_phone | 3 | none | uuid:973c4ac6-f887-48e7-aeaf-4476f2cfab76 |

| 60 | 61 | Chirodzo | 2016-11-16T00:00:00Z | 10 | 14 | muddaub | 1 | yes | more_once | 3 | cow_cart;motorcyle;bicycle;television;radio;co... | 3 | Jan;Feb;Dec | uuid:2401cf50-8859-44d9-bd14-1bf9128766f2 |

| 65 | 66 | Chirodzo | 2016-11-16T00:00:00Z | 10 | 37 | burntbricks | 3 | yes | frequently | 4 | cow_cart;motorcyle;bicycle;television;radio;co... | 3 | none | uuid:a457eab8-971b-4417-a971-2e55b8702816 |

| 70 | 71 | Ruaca | 2016-11-18T00:00:00Z | 6 | 14 | burntbricks | 1 | yes | more_once | 3 | radio;cow_plough;mobile_phone | 2 | Aug;Sept;Oct;Nov | uuid:761f9c49-ec93-4932-ba4c-cc7b78dfcef1 |

| 75 | 155 | God | 2016-11-24T00:00:00Z | 4 | 4 | burntbricks | 1 | NaN | NaN | 1 | electricity | 2 | Jan;Sept;Oct;Nov;Dec | uuid:77b3021b-a9d6-4276-aaeb-5bfcfd413852 |

| 80 | 182 | God | 2016-11-25T00:00:00Z | 7 | 21 | muddaub | 3 | no | more_once | 2 | solar_panel | 3 | Jan;Feb;Nov;Dec | uuid:394033e8-a6e2-4e39-bfac-458753a1ed78 |

| 85 | 197 | God | 2016-11-28T00:00:00Z | 5 | 19 | burntbricks | 2 | no | more_once | 3 | bicycle;television;radio;cow_plough;solar_torc... | 2 | Nov | uuid:85c99fd2-775f-40c9-8654-68223f59d091 |

| 90 | 73 | Ruaca | 2017-04-26T00:00:00Z | 7 | 9 | burntbricks | 2 | yes | more_once | 3 | cow_cart;motorcyle;bicycle;television;radio;co... | 3 | Jan;Sept;Oct | uuid:ac3da862-9e6c-4962-94b6-f4c31624f207 |

| 95 | 101 | God | 2017-04-27T00:00:00Z | 3 | 4 | muddaub | 1 | no | never | 1 | bicycle;solar_torch | 3 | Sept;Oct;Nov | uuid:3c174acd-e431-4523-9ad6-eb14cddca805 |

| 100 | 104 | Ruaca | 2017-04-28T00:00:00Z | 14 | 52 | sunbricks | 1 | yes | never | 4 | cow_cart;bicycle;cow_plough | 3 | Jan;Feb;Dec | uuid:bb2bb365-7d7d-4fe9-9353-b21269676119 |

| 105 | 113 | Ruaca | 2017-05-03T00:00:00Z | 11 | 26 | burntbricks | 3 | no | never | 4 | cow_cart;motorcyle;bicycle;radio;cow_plough;so... | 3 | none | uuid:01210861-aba1-4268-98d0-0260e05f5155 |

| 110 | 108 | God | 2017-05-11T00:00:00Z | 15 | 22 | burntbricks | 2 | no | never | 4 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 3 | Aug;Sept;Oct;Nov | uuid:e4f4d6ba-e698-45a5-947f-ba6da88cc22b |

| 115 | 150 | Ruaca | 2017-05-18T00:00:00Z | 7 | 8 | muddaub | 1 | no | never | 1 | mobile_phone | 3 | Sept;Oct;Nov | uuid:92613d0d-e7b1-4d62-8ea4-451d7cd0a982 |

| 120 | 167 | Ruaca | 2017-06-03T00:00:00Z | 8 | 24 | muddaub | 1 | no | never | 3 | motorcyle;radio;cow_plough;solar_panel;solar_t... | 2 | Jan;Nov;Dec | uuid:a9d1a013-043b-475d-a71b-77ed80abe970 |

| 125 | 192 | Chirodzo | 2017-06-03T00:00:00Z | 9 | 20 | burntbricks | 1 | no | once | 1 | bicycle;television;radio;sterio;solar_panel;so... | 3 | Jan;Nov;Dec | uuid:f94409a6-e461-4e4c-a6fb-0072d3d58b00 |

| 130 | 200 | Chirodzo | 2017-06-04T00:00:00Z | 8 | 20 | burntbricks | 2 | NaN | NaN | 3 | radio;cow_plough;solar_panel;solar_torch;table... | 3 | Oct;Nov | uuid:aa77a0d7-7142-41c8-b494-483a5b68d8a7 |

These can be combined to index a subset at an increment.

We can index columns in two ways, as we did on Wednesday

safi_df['village'].head(2)

0 God

1 God

Name: village, dtype: object

Or using a .

safi_df.village.head(2)

0 God

1 God

Name: village, dtype: object

We can select multiple columns, using a list of column names. We can define the list inline.

safi_df[['village','no_membrs','years_liv']].head(2)

| village | no_membrs | years_liv | |

|---|---|---|---|

| 0 | God | 3 | 4 |

| 1 | God | 7 | 9 |

or in a separate variable

columns_of_interest = ['village','no_membrs','years_liv']

safi_df[columns_of_interest].head(2)

| village | no_membrs | years_liv | |

|---|---|---|---|

| 0 | God | 3 | 4 |

| 1 | God | 7 | 9 |

Functions are objects¶

syllabus_df_list = pd.read_html('https://rhodyprog4ds.github.io/BrownFall20/syllabus/course_map.html')

And we can put them in a dictionary. lambda functions are special functions defined in a single line.

greetingl = lambda name: 'hello ' + name

greetingl('sarah')

'hello sarah'

is the same as

def greetingf(name):

return 'hello ' + name

greetingf('sarah')

'hello sarah'

So, we can define a function in a dictionary like this:

view_rows = {0: lambda df: print(df.head()),

1: lambda df: print(df.tail())}

The len function works on all iterables

for df in syllabus_df_list:

num_row = len(df)

view_rows[num_row%2](df)

Unnamed: 0_level_0 topics \

week Unnamed: 1_level_1

0 1 [admin, python review]

1 2 Loading data, Python review

2 3 Exploratory Data Analysis

3 4 Data Cleaning

4 5 Databases, Merging DataFrames

skills

Unnamed: 2_level_1

0 process

1 [access, prepare, summarize]

2 [summarize, visualize]

3 [prepare, summarize, visualize]

4 [access, construct, summarize]

Unnamed: 0_level_0 skill \

keyword Unnamed: 1_level_1

10 evaluate Evaluate model performance

11 optimize Optimize model parameters

12 compare compare models

13 unstructured model unstructured data

14 workflow use industry standard data science tools and w...

Level 1 \

Unnamed: 2_level_1

10 Explain basic performance metrics for differen...

11 Identify when model parameters need to be opti...

12 Qualitatively compare model classes

13 Identify options for representing text data an...

14 Solve well strucutred problems with a single t...

Level 2 \

Unnamed: 3_level_1

10 Apply basic model evaluation metrics to a held...

11 Manually optimize basic model parameters such ...

12 Compare model classes in specific terms and fi...

13 Apply at least one representation to transform...

14 Solve semi-strucutred, completely specified pr...

Level 3

Unnamed: 4_level_1

10 Evaluate a model with multiple metrics and cro...

11 Select optimal parameters based of mutiple qua...

12 Evaluate tradeoffs between different model com...

13 apply multiple representations and compare and...

14 Scope, choose an appropriate tool pipeline and...

Unnamed: 0_level_0 A1 A2 \

keyword Unnamed: 1_level_1 Unnamed: 2_level_1

10 evaluate 0 0

11 optimize 0 0

12 compare 0 0

13 unstructured 0 0

14 workflow 0 0

A3 A4 A5 \

Unnamed: 3_level_1 Unnamed: 4_level_1 Unnamed: 5_level_1

10 0 0 0

11 0 0 0

12 0 0 0

13 0 0 0

14 0 0 0

A6 A7 A8 \

Unnamed: 6_level_1 Unnamed: 7_level_1 Unnamed: 8_level_1

10 0 0 0

11 0 0 0

12 0 0 0

13 0 0 0

14 0 0 0

A9 A10 A11 \

Unnamed: 9_level_1 Unnamed: 10_level_1 Unnamed: 11_level_1

10 0 1 1

11 0 1 1

12 0 0 1

13 0 0 0

14 0 1 1

A12 A13 # Assignments

Unnamed: 12_level_1 Unnamed: 13_level_1 Unnamed: 14_level_1

10 0 0 2

11 0 0 2

12 0 1 2

13 1 1 2

14 1 1 4

Unnamed: 0_level_0 Level 3 \

keyword Unnamed: 1_level_1

10 evaluate Evaluate a model with multiple metrics and cro...

11 optimize Select optimal parameters based of mutiple qua...

12 compare Evaluate tradeoffs between different model com...

13 unstructured apply multiple representations and compare and...

14 workflow Scope, choose an appropriate tool pipeline and...

P1 P2 P3 P4

Unnamed: 2_level_1 Unnamed: 3_level_1 Unnamed: 4_level_1 Unnamed: 5_level_1

10 0 1 1 0

11 0 0 1 1

12 0 0 1 1

13 0 0 1 1

14 0 0 1 1

The beginning of Exploratory Data Analysis¶

Pandas will give us descriptive statistics

safi_df.describe()

| key_ID | no_membrs | years_liv | rooms | liv_count | no_meals | |

|---|---|---|---|---|---|---|

| count | 131.000000 | 131.00000 | 131.000000 | 131.000000 | 131.000000 | 131.000000 |

| mean | 85.473282 | 7.19084 | 23.053435 | 1.740458 | 2.366412 | 2.603053 |

| std | 63.151628 | 3.17227 | 16.913041 | 1.092547 | 1.082775 | 0.491143 |

| min | 1.000000 | 2.00000 | 1.000000 | 1.000000 | 1.000000 | 2.000000 |

| 25% | 32.500000 | 5.00000 | 12.000000 | 1.000000 | 1.000000 | 2.000000 |

| 50% | 66.000000 | 7.00000 | 20.000000 | 1.000000 | 2.000000 | 3.000000 |

| 75% | 138.000000 | 9.00000 | 27.500000 | 2.000000 | 3.000000 | 3.000000 |

| max | 202.000000 | 19.00000 | 96.000000 | 8.000000 | 5.000000 | 3.000000 |

The statistics of the key_ID column don’t make a lot of sense. We can avoid that by making it the index

safi_df.head()

| key_ID | village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

| 2 | 3 | God | 2016-11-17T00:00:00Z | 10 | 15 | burntbricks | 1 | NaN | NaN | 1 | solar_torch | 2 | Jan;Feb;Mar;Oct;Nov;Dec | uuid:193d7daf-9582-409b-bf09-027dd36f9007 |

| 3 | 4 | God | 2016-11-17T00:00:00Z | 7 | 6 | burntbricks | 1 | NaN | NaN | 2 | bicycle;radio;cow_plough;solar_panel;mobile_phone | 2 | Sept;Oct;Nov;Dec | uuid:148d1105-778a-4755-aa71-281eadd4a973 |

| 4 | 5 | God | 2016-11-17T00:00:00Z | 7 | 40 | burntbricks | 1 | NaN | NaN | 4 | motorcyle;radio;cow_plough;mobile_phone | 2 | Aug;Sept;Oct;Nov | uuid:2c867811-9696-4966-9866-f35c3e97d02d |

the inplace parameter of a pandas functions applies the operation to the DataFrame in memory, but then the function returns nothing, but if we display after that, we see that now the key_ID column is now the index.

safi_df.set_index('key_ID',inplace=True)

safi_df.head(2)

| village | interview_date | no_membrs | years_liv | respondent_wall_type | rooms | memb_assoc | affect_conflicts | liv_count | items_owned | no_meals | months_lack_food | instanceID | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| key_ID | |||||||||||||

| 1 | God | 2016-11-17T00:00:00Z | 3 | 4 | muddaub | 1 | NaN | NaN | 1 | bicycle;television;solar_panel;table | 2 | Jan | uuid:ec241f2c-0609-46ed-b5e8-fe575f6cefef |

| 1 | God | 2016-11-17T00:00:00Z | 7 | 9 | muddaub | 1 | yes | once | 3 | cow_cart;bicycle;radio;cow_plough;solar_panel;... | 2 | Jan;Sept;Oct;Nov;Dec | uuid:099de9c9-3e5e-427b-8452-26250e840d6e |

and if we describe again, we see it doesn’t compute on that column

safi_df.describe()

| no_membrs | years_liv | rooms | liv_count | no_meals | |

|---|---|---|---|---|---|

| count | 131.00000 | 131.000000 | 131.000000 | 131.000000 | 131.000000 |

| mean | 7.19084 | 23.053435 | 1.740458 | 2.366412 | 2.603053 |

| std | 3.17227 | 16.913041 | 1.092547 | 1.082775 | 0.491143 |

| min | 2.00000 | 1.000000 | 1.000000 | 1.000000 | 2.000000 |

| 25% | 5.00000 | 12.000000 | 1.000000 | 1.000000 | 2.000000 |

| 50% | 7.00000 | 20.000000 | 1.000000 | 2.000000 | 3.000000 |

| 75% | 9.00000 | 27.500000 | 2.000000 | 3.000000 | 3.000000 |

| max | 19.00000 | 96.000000 | 8.000000 | 5.000000 | 3.000000 |

We can also call any of those on one column or one statistic.

safi_df['rooms'].mean()

1.7404580152671756

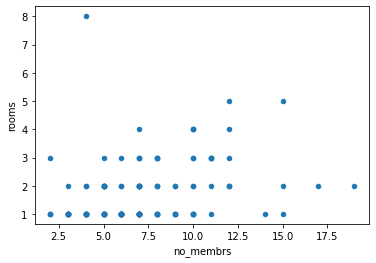

Pandas also has some built in plotting functions.

safi_df.plot.scatter('no_membrs','rooms')

<AxesSubplot:xlabel='no_membrs', ylabel='rooms'>

After Class Questions¶

How can we clean data? what other topics will we cover in this class?

Great Question! We’ll get to data cleaning soon. See the Schedule.

How does the syntax on the question from prismia today work?

The question was:

Read the tables off of the syllabus course map page with

read_htmland make a list of the shapes of all of the tables on the page. Save the output to a variable and paste the value of that variable as your answer to the question.

And the solution was:

This uses something called a list comprehension, they are a way to make lists, that look a lot like putting a for loop in a list. There are two ways to use them under the hood, as in the linked documentation, the one above is considered more pythonic, because it is more concise.

The [] define a list, we can do that with anything, for example my zoom meetings today were:

Note that the last line is there to display what happened, but it would work without.

We could get the same output using append and a regular for loop. That syntax would look like:

Where I can go to find a list of all the syntax.

A list of all the syntax might be hard to find, but the course textbook is free online with a lot of reference material in it.

A lot of what we did today is in Data Indexing and Selection

Also, the class notes are listed after each class on this website.

Also, you can get help from within a notebook and the pandas User guide has full details.

More Practice¶

These additional questions are for if you want more practice with things we’ve done this week, before class next week.

Which of the following is a dictionary?

Check using the type function. We can go further and build a list of them to display the one that’s a dictionary.

First, we can look at them

Then we can check the types and find the dictionary

What type is the shape of a pandas DataFrame?

We can find that by using the type function:

tuple is another iterable type, so we can index them

Also, in python, we can assign to multiple values when it returns a tuple. We saw this before, when we used the items() method on a dictionary.

we can see that with the type function

So, with the shape property we could also return it to two values

What does indexing with -1 do?

It returns the last value, here’s an example, using the todays_meetings variable defined above.

Further Reading¶

If you’ve made it this far, let me know how you found these notes.