Learning Curves

29. Learning Curves

import matplotlib.pyplot as plt

import numpy as np

import seaborn as sns

import pandas as pd

from sklearn import datasets

from sklearn import cluster

from sklearn import naive_bayes

from sklearn import svm

from sklearn import tree

# import the whole model selection module

from sklearn import model_selection

sns.set_theme(palette='colorblind')

Today, we’ll load a new dataset and use the default sklearn data structure for datasets. We get back the default data structure when we use a load_ function without any parameters at all.

digits = datasets.load_digits()

This shows us that the type is defined by sklearn and that they called it Bunch:

type(digits)

sklearn.utils._bunch.Bunch
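Before printing the whole object, a more compact overview is possible because a Bunch behaves like a dictionary. The following is a minimal sketch; the variable name `keys` is only for illustration.

```python
from sklearn import datasets

digits = datasets.load_digits()

# a Bunch behaves like a dictionary, so we can list its keys
keys = list(digits.keys())
print(keys)

# each entry can be reached with brackets or as an attribute
print(digits['data'] is digits.data)
```

Both access styles return the same underlying array, so either can be used in the rest of the notes.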

We can print it out to begin exploring it.

digits

{'data': array([[ 0., 0., 5., ..., 0., 0., 0.],

[ 0., 0., 0., ..., 10., 0., 0.],

[ 0., 0., 0., ..., 16., 9., 0.],

...,

[ 0., 0., 1., ..., 6., 0., 0.],

[ 0., 0., 2., ..., 12., 0., 0.],

[ 0., 0., 10., ..., 12., 1., 0.]]),

'target': array([0, 1, 2, ..., 8, 9, 8]),

'frame': None,

'feature_names': ['pixel_0_0',

'pixel_0_1',

'pixel_0_2',

'pixel_0_3',

'pixel_0_4',

'pixel_0_5',

'pixel_0_6',

'pixel_0_7',

'pixel_1_0',

'pixel_1_1',

'pixel_1_2',

'pixel_1_3',

'pixel_1_4',

'pixel_1_5',

'pixel_1_6',

'pixel_1_7',

'pixel_2_0',

'pixel_2_1',

'pixel_2_2',

'pixel_2_3',

'pixel_2_4',

'pixel_2_5',

'pixel_2_6',

'pixel_2_7',

'pixel_3_0',

'pixel_3_1',

'pixel_3_2',

'pixel_3_3',

'pixel_3_4',

'pixel_3_5',

'pixel_3_6',

'pixel_3_7',

'pixel_4_0',

'pixel_4_1',

'pixel_4_2',

'pixel_4_3',

'pixel_4_4',

'pixel_4_5',

'pixel_4_6',

'pixel_4_7',

'pixel_5_0',

'pixel_5_1',

'pixel_5_2',

'pixel_5_3',

'pixel_5_4',

'pixel_5_5',

'pixel_5_6',

'pixel_5_7',

'pixel_6_0',

'pixel_6_1',

'pixel_6_2',

'pixel_6_3',

'pixel_6_4',

'pixel_6_5',

'pixel_6_6',

'pixel_6_7',

'pixel_7_0',

'pixel_7_1',

'pixel_7_2',

'pixel_7_3',

'pixel_7_4',

'pixel_7_5',

'pixel_7_6',

'pixel_7_7'],

'target_names': array([0, 1, 2, 3, 4, 5, 6, 7, 8, 9]),

'images': array([[[ 0., 0., 5., ..., 1., 0., 0.],

[ 0., 0., 13., ..., 15., 5., 0.],

[ 0., 3., 15., ..., 11., 8., 0.],

...,

[ 0., 4., 11., ..., 12., 7., 0.],

[ 0., 2., 14., ..., 12., 0., 0.],

[ 0., 0., 6., ..., 0., 0., 0.]],

[[ 0., 0., 0., ..., 5., 0., 0.],

[ 0., 0., 0., ..., 9., 0., 0.],

[ 0., 0., 3., ..., 6., 0., 0.],

...,

[ 0., 0., 1., ..., 6., 0., 0.],

[ 0., 0., 1., ..., 6., 0., 0.],

[ 0., 0., 0., ..., 10., 0., 0.]],

[[ 0., 0., 0., ..., 12., 0., 0.],

[ 0., 0., 3., ..., 14., 0., 0.],

[ 0., 0., 8., ..., 16., 0., 0.],

...,

[ 0., 9., 16., ..., 0., 0., 0.],

[ 0., 3., 13., ..., 11., 5., 0.],

[ 0., 0., 0., ..., 16., 9., 0.]],

...,

[[ 0., 0., 1., ..., 1., 0., 0.],

[ 0., 0., 13., ..., 2., 1., 0.],

[ 0., 0., 16., ..., 16., 5., 0.],

...,

[ 0., 0., 16., ..., 15., 0., 0.],

[ 0., 0., 15., ..., 16., 0., 0.],

[ 0., 0., 2., ..., 6., 0., 0.]],

[[ 0., 0., 2., ..., 0., 0., 0.],

[ 0., 0., 14., ..., 15., 1., 0.],

[ 0., 4., 16., ..., 16., 7., 0.],

...,

[ 0., 0., 0., ..., 16., 2., 0.],

[ 0., 0., 4., ..., 16., 2., 0.],

[ 0., 0., 5., ..., 12., 0., 0.]],

[[ 0., 0., 10., ..., 1., 0., 0.],

[ 0., 2., 16., ..., 1., 0., 0.],

[ 0., 0., 15., ..., 15., 0., 0.],

...,

[ 0., 4., 16., ..., 16., 6., 0.],

[ 0., 8., 16., ..., 16., 8., 0.],

[ 0., 1., 8., ..., 12., 1., 0.]]]),

'DESCR': ".. _digits_dataset:\n\nOptical recognition of handwritten digits dataset\n--------------------------------------------------\n\n**Data Set Characteristics:**\n\n :Number of Instances: 1797\n :Number of Attributes: 64\n :Attribute Information: 8x8 image of integer pixels in the range 0..16.\n :Missing Attribute Values: None\n :Creator: E. Alpaydin (alpaydin '@' boun.edu.tr)\n :Date: July; 1998\n\nThis is a copy of the test set of the UCI ML hand-written digits datasets\nhttps://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits\n\nThe data set contains images of hand-written digits: 10 classes where\neach class refers to a digit.\n\nPreprocessing programs made available by NIST were used to extract\nnormalized bitmaps of handwritten digits from a preprinted form. From a\ntotal of 43 people, 30 contributed to the training set and different 13\nto the test set. 32x32 bitmaps are divided into nonoverlapping blocks of\n4x4 and the number of on pixels are counted in each block. This generates\nan input matrix of 8x8 where each element is an integer in the range\n0..16. This reduces dimensionality and gives invariance to small\ndistortions.\n\nFor info on NIST preprocessing routines, see M. D. Garris, J. L. Blue, G.\nT. Candela, D. L. Dimmick, J. Geist, P. J. Grother, S. A. Janet, and C.\nL. Wilson, NIST Form-Based Handprint Recognition System, NISTIR 5469,\n1994.\n\n.. topic:: References\n\n - C. Kaynak (1995) Methods of Combining Multiple Classifiers and Their\n Applications to Handwritten Digit Recognition, MSc Thesis, Institute of\n Graduate Studies in Science and Engineering, Bogazici University.\n - E. Alpaydin, C. Kaynak (1998) Cascading Classifiers, Kybernetika.\n - Ken Tang and Ponnuthurai N. Suganthan and Xi Yao and A. Kai Qin.\n Linear dimensionalityreduction using relevance weighted LDA. School of\n Electrical and Electronic Engineering Nanyang Technological University.\n 2005.\n - Claudio Gentile. 
A New Approximate Maximal Margin Classification\n Algorithm. NIPS. 2000.\n"}

We note that it has key-value pairs, and that the last one is called DESCR and is text that describes the data. If we send that to the print function, it will be formatted more readably.

print(digits['DESCR'])

.. _digits_dataset:

Optical recognition of handwritten digits dataset

--------------------------------------------------

**Data Set Characteristics:**

:Number of Instances: 1797

:Number of Attributes: 64

:Attribute Information: 8x8 image of integer pixels in the range 0..16.

:Missing Attribute Values: None

:Creator: E. Alpaydin (alpaydin '@' boun.edu.tr)

:Date: July; 1998

This is a copy of the test set of the UCI ML hand-written digits datasets

https://archive.ics.uci.edu/ml/datasets/Optical+Recognition+of+Handwritten+Digits

The data set contains images of hand-written digits: 10 classes where

each class refers to a digit.

Preprocessing programs made available by NIST were used to extract

normalized bitmaps of handwritten digits from a preprinted form. From a

total of 43 people, 30 contributed to the training set and different 13

to the test set. 32x32 bitmaps are divided into nonoverlapping blocks of

4x4 and the number of on pixels are counted in each block. This generates

an input matrix of 8x8 where each element is an integer in the range

0..16. This reduces dimensionality and gives invariance to small

distortions.

For info on NIST preprocessing routines, see M. D. Garris, J. L. Blue, G.

T. Candela, D. L. Dimmick, J. Geist, P. J. Grother, S. A. Janet, and C.

L. Wilson, NIST Form-Based Handprint Recognition System, NISTIR 5469,

1994.

.. topic:: References

- C. Kaynak (1995) Methods of Combining Multiple Classifiers and Their

Applications to Handwritten Digit Recognition, MSc Thesis, Institute of

Graduate Studies in Science and Engineering, Bogazici University.

- E. Alpaydin, C. Kaynak (1998) Cascading Classifiers, Kybernetika.

- Ken Tang and Ponnuthurai N. Suganthan and Xi Yao and A. Kai Qin.

Linear dimensionalityreduction using relevance weighted LDA. School of

Electrical and Electronic Engineering Nanyang Technological University.

2005.

- Claudio Gentile. A New Approximate Maximal Margin Classification

Algorithm. NIPS. 2000.

This tells us that we are going to be predicting what digit (0,1,2,3,4,5,6,7,8, or 9) is in the image.

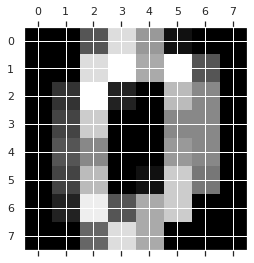

To get an idea of what the images look like, we can use matshow, which is short for matrix show. It takes a 2D matrix and plots it as an image. To have it drawn in grayscale, we first set the default colormap with matplotlib’s plt.gray().

plt.gray()

plt.matshow(digits.images[0])

<matplotlib.image.AxesImage at 0x7ff4b57fdfa0>

<Figure size 432x288 with 0 Axes>
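As an alternative sketch, the colormap can be passed to matshow directly, which avoids changing the global default and the empty figure that plt.gray() leaves behind. The Agg backend line is an assumption for running this as a script without a display; it is not needed in a notebook.

```python
import matplotlib
matplotlib.use("Agg")  # only needed when running as a script without a display
import matplotlib.pyplot as plt
from sklearn import datasets

digits = datasets.load_digits()

# pass the colormap for this one plot instead of changing the global default
img = plt.matshow(digits.images[0], cmap='gray')
plt.title(f"label: {digits.target[0]}")
```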

Bunch objects are designed for machine learning, so they have the features (as “data”) and the target explicitly identified.

digits_X = digits.data

digits_y = digits.target

We can further check the type and shape of these:

type(digits_y)

numpy.ndarray

Note

Because this is the target, it’s okay that it is one dimensional.

digits_y.shape

(1797,)

digits_X.shape

(1797, 64)

This has one row for each sample, and each 8x8 image has been reshaped into a length-64 vector. So we have one ‘feature’ for each pixel in the images.
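To check the relationship between the images and the feature matrix, we can flatten the images ourselves and compare. This is a small sketch; `flattened` is a name introduced here for illustration.

```python
import numpy as np
from sklearn import datasets

digits = datasets.load_digits()

# flatten every 8x8 image into a row of 64 pixel values
flattened = digits.images.reshape(len(digits.images), -1)

print(flattened.shape)
# the flattened images should match the data array element by element
print(np.array_equal(flattened, digits.data))
```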

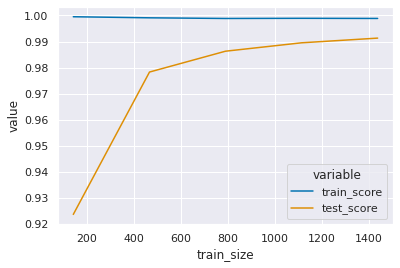

We are going to do some model comparison, so we will instantiate estimator objects for two different classifiers.

svm_clf = svm.SVC(gamma=0.001)

gnb_clf = naive_bayes.GaussianNB()

We’re going to use a ShuffleSplit object to do cross validation with 100 iterations, each time with 20% of the data randomly selected as a validation set. Using that many iterations gives smoother mean test and train score curves.

Further Reading

You can see visualizations of the different cross validation types in the sklearn documentation.

cv = model_selection.ShuffleSplit(n_splits=100, test_size=0.2, random_state=0)
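To see what a ShuffleSplit object produces, here is a sketch on a tiny made-up dataset with only 3 iterations. The names `toy_X` and `toy_cv` are hypothetical and are not used elsewhere in these notes.

```python
import numpy as np
from sklearn import model_selection

toy_X = np.arange(10).reshape(-1, 1)  # 10 samples, 1 feature (made-up data)

toy_cv = model_selection.ShuffleSplit(n_splits=3, test_size=0.2, random_state=0)

splits = list(toy_cv.split(toy_X))
for train_idx, test_idx in splits:
    # each iteration draws a fresh random 80/20 partition of the row indices
    print("train:", train_idx, "test:", test_idx)
```

Unlike K-fold cross validation, the test sets from different iterations may overlap, because every iteration is drawn independently.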

Note

This object has a random_state parameter. The GridSearchCV that we were using didn’t have a way to control the random state directly, but its cv parameter accepts not only integers but also cross validation objects. The KFold cross validation object also has a random_state parameter (it takes effect when shuffle=True), so we could repeat what we did in previous classes by creating a KFold object with a fixed random state.
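A minimal sketch of passing a cross validation object with a fixed random state through the cv parameter follows. It uses cross_val_score on the iris data only to keep the example short; GridSearchCV accepts the same cv argument. The dataset choice and the variable names are assumptions made for illustration.

```python
from sklearn import datasets, model_selection, naive_bayes

iris_X, iris_y = datasets.load_iris(return_X_y=True)

# random_state only has an effect on KFold when shuffle=True
kf = model_selection.KFold(n_splits=5, shuffle=True, random_state=0)

# the fixed random state gives the same folds, and so the same scores, each time
scores_a = model_selection.cross_val_score(naive_bayes.GaussianNB(), iris_X, iris_y, cv=kf)
scores_b = model_selection.cross_val_score(naive_bayes.GaussianNB(), iris_X, iris_y, cv=kf)

print(scores_a)
print((scores_a == scores_b).all())
```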

We’ll also create a linearly spaced list of training set sizes, expressed as fractions of the available training data, and we’ll split the work across several parallel jobs to be more computationally efficient.

train_sizes=np.linspace(0.1, 1.0, 5)

n_jobs=4

Try it yourself

Try varying the n_jobs parameter and timing the execution using the timeit magic.

Now we can create the learning curve.

train_sizes_svm, train_scores_svm, test_scores_svm, fit_times_svm, score_times_svm = model_selection.learning_curve(

svm_clf,

digits_X,

digits_y,

cv=cv,

n_jobs=n_jobs,

train_sizes=train_sizes,

return_times=True,)

It returns the list of the counts for each training size (we input fractions and it returns counts):

train_sizes_svm

array([ 143, 467, 790, 1113, 1437])
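These counts can be reproduced by hand. The sketch below shows arithmetic that matches the output above, assuming each fraction is scaled by the largest training set available in one iteration and then truncated to an integer; it is not necessarily the library's exact internal procedure.

```python
import numpy as np

n_samples = 1797   # rows in the digits data
test_size = 0.2    # fraction held out by ShuffleSplit in each iteration

# largest training set available in one cross validation iteration
n_max_train = int(n_samples * (1 - test_size))

fractions = np.linspace(0.1, 1.0, 5)
# scale each fraction by the largest training set and truncate to an integer
counts = (fractions * n_max_train).astype(int)
print(counts)
```

So the largest training size is 1437 rather than 1797, because 20% of the data is always held out for validation.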

Each of the other return values is an array with one row per training size; each row is 100 long because our cross validation had 100 iterations.

fit_times_svm.shape

(5, 100)
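The shape rule is easier to see on a smaller run. This sketch assumes a reduced setup (5 iterations, 3 training sizes, Gaussian Naive Bayes) chosen only so it finishes quickly; the variable names are hypothetical.

```python
import numpy as np
from sklearn import datasets, model_selection, naive_bayes

X, y = datasets.load_digits(return_X_y=True)

small_cv = model_selection.ShuffleSplit(n_splits=5, test_size=0.2, random_state=0)

# without return_times=True, only three values come back
sizes, train_scores, test_scores = model_selection.learning_curve(
    naive_bayes.GaussianNB(),
    X,
    y,
    cv=small_cv,
    train_sizes=np.linspace(0.1, 1.0, 3),
)

# one row per training size, one column per cross validation iteration
print(sizes.shape, train_scores.shape, test_scores.shape)
```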

We can save it in a DataFrame after averaging over the 100 trials.

svm_learning_df = pd.DataFrame(data = train_sizes_svm, columns = ['train_size'])

# svm_learning_df['train_size'] = train_sizes_svm

svm_learning_df['train_score'] = np.mean(train_scores_svm,axis=1)

svm_learning_df['test_score'] = np.mean(test_scores_svm,axis=1)

svm_learning_df['fit_time'] = np.mean(fit_times_svm,axis=1)

svm_learning_df['score_times'] = np.mean(score_times_svm,axis=1)
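To make the role of axis=1 concrete, here is a sketch on a small made-up array. It also computes the standard deviation, which is an addition for illustration: the mean discards the spread across iterations, so it can be kept separately if needed.

```python
import numpy as np

# made-up scores: 2 training sizes (rows) x 3 cross validation iterations (columns)
toy_scores = np.array([[0.90, 0.92, 0.94],
                       [0.96, 0.98, 1.00]])

# axis=1 averages across the columns, leaving one value per training size
row_means = np.mean(toy_scores, axis=1)
# the spread across iterations, also one value per training size
row_stds = np.std(toy_scores, axis=1)

print(row_means)
print(row_stds)
```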

Then we can look at the DataFrame:

svm_learning_df.head()

| | train_size | train_score | test_score | fit_time | score_times |
|---|---|---|---|---|---|
| 0 | 143 | 0.999510 | 0.923611 | 0.005320 | 0.007160 |
| 1 | 467 | 0.999101 | 0.978278 | 0.025563 | 0.019791 |
| 2 | 790 | 0.998848 | 0.986278 | 0.048724 | 0.027512 |
| 3 | 1113 | 0.998913 | 0.989500 | 0.077369 | 0.034811 |
| 4 | 1437 | 0.998859 | 0.991306 | 0.107892 | 0.041552 |

We can use our skills in transforming data to make it easier to examine just a subset of the scores.

svm_learning_df_scores = svm_learning_df.melt(id_vars=['train_size'],value_vars=['train_score','test_score'])

svm_learning_df_scores.head(2)

| | train_size | variable | value |
|---|---|---|---|
| 0 | 143 | train_score | 0.999510 |
| 1 | 467 | train_score | 0.999101 |
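To see the whole effect of melt, here is a sketch on a two-row DataFrame. The values are rounded from the first two rows of the table above; `wide_df` and `long_df` are names introduced here for illustration.

```python
import pandas as pd

wide_df = pd.DataFrame({
    'train_size': [143, 467],
    'train_score': [0.9995, 0.9991],
    'test_score': [0.9236, 0.9783],
    'fit_time': [0.0053, 0.0256],
})

# keep train_size as the identifier and stack the two score columns;
# fit_time is dropped because it is not listed in value_vars
long_df = wide_df.melt(id_vars=['train_size'],
                       value_vars=['train_score', 'test_score'])
print(long_df)
```

Each original row becomes two rows, one per score type, which is the layout the hue parameter of a seaborn plot expects.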

This new DataFrame allows us to make convenient plots.

sns.lineplot(data = svm_learning_df_scores, x ='train_size', y='value',hue='variable')

<AxesSubplot:xlabel='train_size', ylabel='value'>

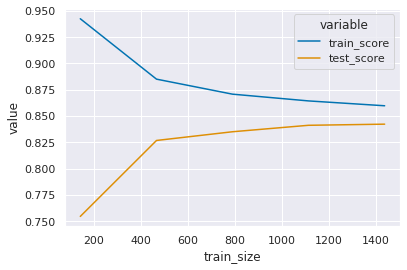

We can compute the learning curve for Gaussian Naive Bayes in the same way and then summarize it as we did for the SVM.

train_sizes_gnb, train_scores_gnb, test_scores_gnb, fit_times_gnb, score_times_gnb = model_selection.learning_curve(

    gnb_clf,

    digits_X,

    digits_y,

    cv=cv,

    n_jobs=n_jobs,

    train_sizes=train_sizes,

    return_times=True,)

gnb_learning_df = pd.DataFrame(data = train_sizes_gnb, columns = ['train_size'])

# gnb_learning_df['train_size'] = train_sizes_gnb

gnb_learning_df['train_score'] = np.mean(train_scores_gnb,axis=1)

gnb_learning_df['test_score'] = np.mean(test_scores_gnb,axis=1)

gnb_learning_df['fit_time'] = np.mean(fit_times_gnb,axis=1)

gnb_learning_df['score_times'] = np.mean(score_times_gnb,axis=1)

gnb_learning_scores = gnb_learning_df.melt(id_vars=['train_size'],value_vars=['train_score','test_score'])

sns.lineplot(data = gnb_learning_scores, x ='train_size', y='value',hue='variable')

<AxesSubplot:xlabel='train_size', ylabel='value'>

These scores are overall not as high as the SVM’s (note the values on the y axis).
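Comparing the two models is easier when both sets of scores share one plot. The sketch below uses a hypothetical helper, `summarize_learning_curve`, which is not part of sklearn or of these notes, and made-up arrays that stand in for the learning_curve output.

```python
import numpy as np
import pandas as pd


def summarize_learning_curve(train_sizes, train_scores, test_scores, model_name):
    """Average scores over cross validation iterations and label rows with the model."""
    return pd.DataFrame({
        'train_size': train_sizes,
        'train_score': np.mean(train_scores, axis=1),
        'test_score': np.mean(test_scores, axis=1),
        'model': model_name,
    })


# made-up arrays standing in for learning_curve output (2 sizes x 2 iterations)
sizes = np.array([100, 200])
summary_a = summarize_learning_curve(sizes, np.ones((2, 2)), np.full((2, 2), 0.9), 'model_a')
summary_b = summarize_learning_curve(sizes, np.ones((2, 2)), np.full((2, 2), 0.8), 'model_b')

# stacking the summaries lets one plot compare both models on a shared y axis
comparison_df = pd.concat([summary_a, summary_b], ignore_index=True)
print(comparison_df)
```

With the real arrays in place of the made-up ones, a call like sns.lineplot(data=comparison_df, x='train_size', y='test_score', hue='model') would draw both test curves on the same axes.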

29.1. Questions After Class

29.1.1. When would I use an SVM?

SVMs are a very powerful model. They’re a good choice when you need high accuracy together with relatively quick training (and test) time and a model that takes a small amount of memory (e.g., running the prediction on a mobile device or smart device).
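One way to probe the memory point is to count how many training samples a fitted SVC retains as support vectors. This is a rough sketch, assuming the same gamma as above and fitting on all of the digits data; it says nothing about other datasets or settings.

```python
from sklearn import datasets, svm

X, y = datasets.load_digits(return_X_y=True)

clf = svm.SVC(gamma=0.001)
clf.fit(X, y)

# predictions depend only on the support vectors, not on every training sample
n_support = clf.support_vectors_.shape[0]
print(n_support, "support vectors out of", X.shape[0], "training samples")
```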